The recent advancement of generative foundational

models has ushered in a new era of image generation in the realm of natural images,

revolutionizing art design, entertainment, environment simulation, and beyond.

Despite producing high-quality samples, existing methods are constrained to generating images

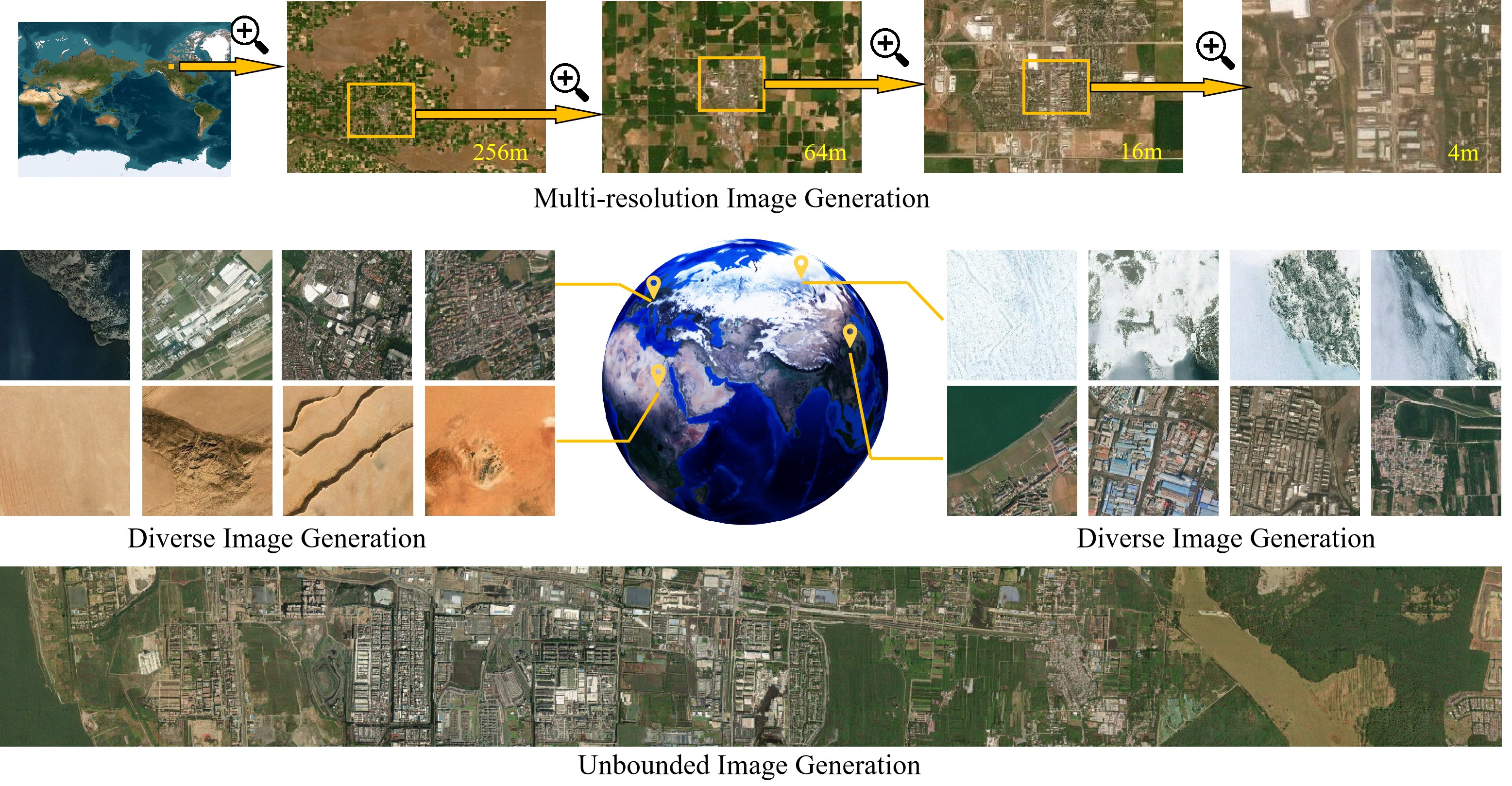

of scenes at a limited scale. In this paper, we present MetaEarth - a generative foundation model

that breaks the barrier by scaling image generation to a global level, exploring the creation of

worldwide, multi-resolution, unbounded, and virtually limitless remote sensing images.

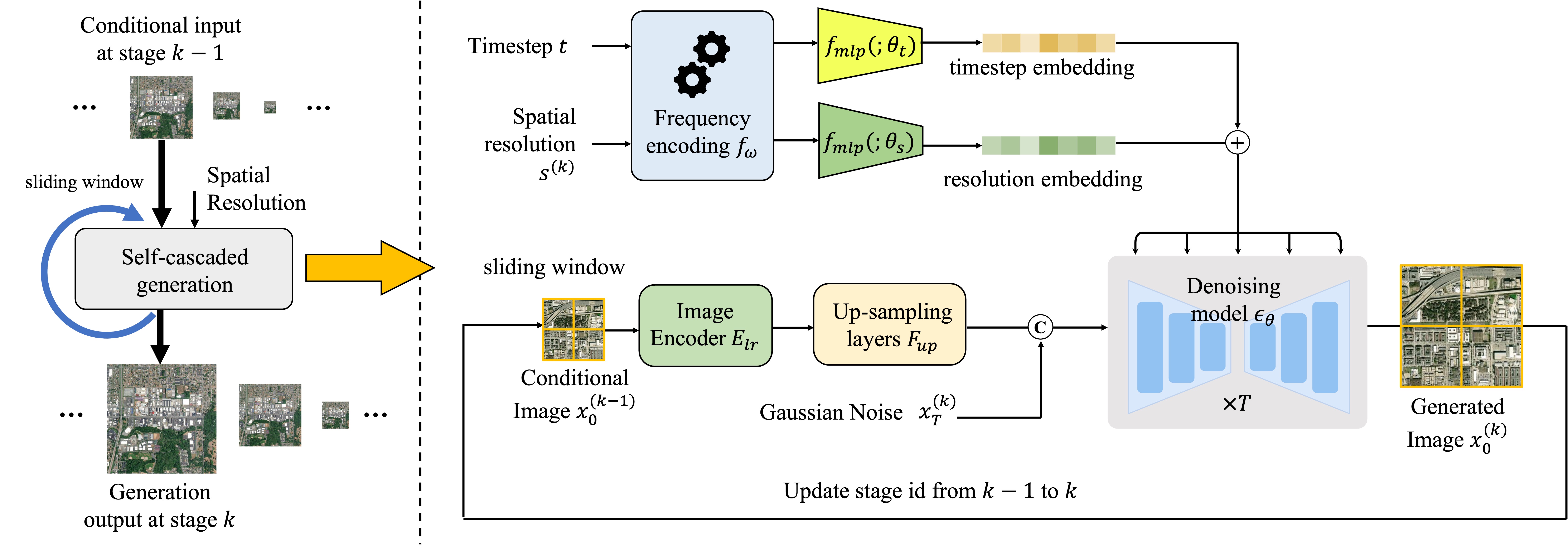

In MetaEarth, we propose a resolution-guided self-cascading generative framework, which enables the generation of images of any region at a wide range of geographical resolutions. To achieve unbounded and arbitrary-sized image generation, we design a novel noise sampling strategy for denoising diffusion models by analyzing the generation conditions and initial noise. To train MetaEarth, we construct a large dataset comprising multi-resolution optical remote sensing images with geographical information. Experiments demonstrate the capability of our method to generate global-scale images. Additionally, MetaEarth serves as a data engine that can provide high-quality and rich training data for downstream tasks. Our model opens up new possibilities for constructing generative world models by simulating Earth's visuals from an innovative overhead perspective.

We constructed a world-scale remote sensing generative foundation model with over 600 million parameters based on the denoising diffusion paradigm. We propose a resolution-guided, self-cascading framework capable of generating scenes at multiple resolutions for any region of the globe. The generation process unfolds in multiple stages, starting with low-resolution images and advancing to high-resolution images. In each stage, the generation is conditioned on the low-resolution images generated in the preceding stage and on their associated spatial resolutions.
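The staged generation described above can be illustrated with a minimal Python sketch. It is not the MetaEarth implementation: the names `StageFn`, `self_cascade`, and `make_toy_stage`, the upsampling factor of 2, the 64 m starting resolution, and the choice to give the first stage no image condition are all assumptions made for illustration. The toy stage only upsamples and perturbs its input, where a real model would run conditional diffusion sampling.

```python
import random
from typing import Callable, List, Optional, Tuple

Image = List[List[float]]  # toy stand-in for an image tensor
# Hypothetical stage signature:
# (low-res image or None, its resolution in metres or None) -> higher-res image
StageFn = Callable[[Optional[Image], Optional[float]], Image]


def upsample_nearest(img: Image, factor: int) -> Image:
    """Nearest-neighbour upsampling: repeat every pixel `factor` times per axis."""
    return [
        [value for value in row for _ in range(factor)]
        for row in img
        for _ in range(factor)
    ]


def make_toy_stage(seed: int, base_size: int = 4, factor: int = 2) -> StageFn:
    """Build a placeholder for one generation stage (not a diffusion model)."""
    rng = random.Random(seed)

    def stage(condition: Optional[Image],
              condition_resolution_m: Optional[float]) -> Image:
        # A real model would embed condition_resolution_m as guidance;
        # this toy stage ignores it.
        if condition is None:
            # First stage (assumption): no image condition, sample a small image.
            return [[rng.random() for _ in range(base_size)]
                    for _ in range(base_size)]
        upsampled = upsample_nearest(condition, factor)
        # Add small random "detail" on top of the upsampled condition.
        return [[v + 0.01 * rng.gauss(0.0, 1.0) for v in row] for row in upsampled]

    return stage


def self_cascade(stage: StageFn, base_resolution_m: float,
                 num_stages: int, factor: int = 2) -> List[Tuple[float, Image]]:
    """Apply the same stage repeatedly, feeding each output to the next stage."""
    image = stage(None, None)
    resolution_m = base_resolution_m
    outputs = [(resolution_m, image)]
    for _ in range(num_stages - 1):
        # Condition on the previous image and on its spatial resolution.
        image = stage(image, resolution_m)
        resolution_m /= factor  # finer ground sampling distance after upsampling
        outputs.append((resolution_m, image))
    return outputs


pyramid = self_cascade(make_toy_stage(seed=0), base_resolution_m=64.0, num_stages=3)
for resolution_m, image in pyramid:
    print(f"{resolution_m:g} m/pixel -> {len(image)}x{len(image[0])} pixels")
```

Reusing one `stage` callable for every level mirrors the "self-cascading" idea of a single model serving all resolutions; a conventional cascade would instead hold a separate model per level.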

MetaEarth can generate a variety of remote sensing scenes worldwide, including glaciers, snowfields, deserts, forests, beaches, farmlands, industrial areas, and residential areas.

To generate large-scale remote sensing images of arbitrary size, we propose an unbounded image generation method comprising a memory-efficient sliding-window generation pipeline and a noise sampling strategy. This method greatly alleviates the visual discontinuities caused by stitching image blocks, thereby enabling unbounded, arbitrary-sized image generation.
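A sliding-window pipeline of this kind can be sketched in a few lines of Python. The page does not spell out the noise sampling strategy, so the sketch assumes one plausible reading: a single noise field is sampled for the whole canvas, each window crops its initial noise from that field so that overlapping windows start from identical noise, and overlapping outputs are averaged. The names `window_starts`, `generate_unbounded`, and `toy_denoise_tile` are hypothetical, and the toy denoiser is a pointwise function standing in for a diffusion sampler.

```python
import math
import random
from typing import Callable, List

Grid = List[List[float]]


def window_starts(total: int, window: int, stride: int) -> List[int]:
    """Start offsets of sliding windows that cover [0, total) completely."""
    if window > total:
        raise ValueError("window must not exceed the canvas size")
    starts = list(range(0, total - window + 1, stride))
    if starts[-1] != total - window:
        starts.append(total - window)  # extra window flush with the far edge
    return starts


def crop(grid: Grid, top: int, left: int, size: int) -> Grid:
    """Copy a size x size patch out of a 2-D grid."""
    return [row[left:left + size] for row in grid[top:top + size]]


def toy_denoise_tile(noise_tile: Grid) -> Grid:
    """Placeholder for a diffusion sampler: a pointwise squashing function."""
    return [[math.tanh(v) for v in row] for row in noise_tile]


def generate_unbounded(denoise_tile: Callable[[Grid], Grid], height: int,
                       width: int, window: int, stride: int, seed: int = 0) -> Grid:
    """Generate a height x width canvas one window at a time."""
    rng = random.Random(seed)
    # Assumption: one global noise field, so overlapping windows share noise.
    noise = [[rng.gauss(0.0, 1.0) for _ in range(width)] for _ in range(height)]
    total = [[0.0] * width for _ in range(height)]
    count = [[0] * width for _ in range(height)]
    for top in window_starts(height, window, stride):
        for left in window_starts(width, window, stride):
            # Only one window passes through the denoiser at any time.
            tile = denoise_tile(crop(noise, top, left, window))
            for i in range(window):
                for j in range(window):
                    total[top + i][left + j] += tile[i][j]
                    count[top + i][left + j] += 1
    # Average the contributions wherever windows overlap.
    return [[total[i][j] / count[i][j] for j in range(width)]
            for i in range(height)]


canvas = generate_unbounded(toy_denoise_tile, height=10, width=7,
                            window=4, stride=3, seed=1)
print(len(canvas), "x", len(canvas[0]))
```

With the pointwise toy denoiser, overlapping windows agree on their shared pixels, so the stitched canvas has no seams at all. A real denoiser also depends on each window's surrounding context, so shared noise and averaging would reduce discontinuities rather than guarantee their absence.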

Here are some examples of high-resolution, large-scale images generated by our model.

The self-cascading generation framework in our method enables the model to generate images at diverse spatial resolutions.

Trained on large-scale data, MetaEarth has strong generalization capabilities and performs well even on unseen scenes. We create a "parallel world" by using a low-resolution map of "Pandora Planet" (generated by GPT-4V) as the initial condition for our model and then generating higher-resolution images sequentially. Although our training data does not cover such scenes, MetaEarth is still able to generate images with reasonable land cover distribution and realistic details.

@article{yu2024metaearth,
  title={MetaEarth: A Generative Foundation Model for Global-Scale Remote Sensing Image Generation},
  author={Zhiping Yu and Chenyang Liu and Liqin Liu and Zhenwei Shi and Zhengxia Zou},
  year={2024},
  journal={arXiv preprint arXiv:2405.13570}
}

[Preprint]


[Code]
